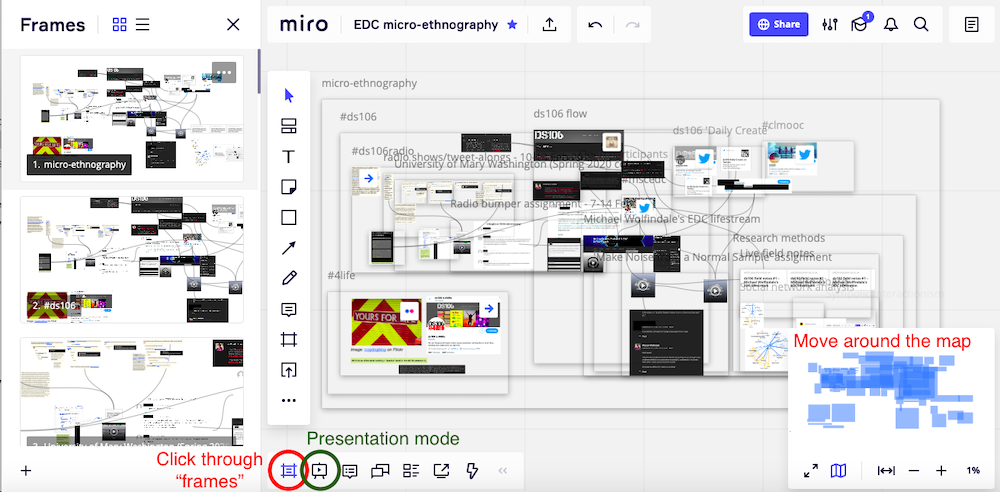

As I disentangle myself from my lifestream feeds, and reflect on the course, I consider how I have perceived and been influenced by the algorithmic systems involved.

Google and Twitter were consistent influences, the latter through new/existing connections and via #mscedc, #AlgorithmsForHer and #ds106, and I saved/favourited (often highly ranked) resources to Pocket, YouTube and SoundCloud (and other feeds).

While I had some awareness of these algorithms, alterations to my perception of the ‘notion of an algorithm’ (Beer 2017: 7) have shaped my behaviour. Believing I “understand” how Google “works”, reading about the Twitter algorithm and reflecting on ranking/ordering have altered my perceptions, and reading about ‘learning as “nudging”’ (Knox et al. 2020: 38) made me think twice before accepting the limiting recommendations presented to me.

Referring to the readings, these algorithmic operations are interwoven with, and cannot be separated from, the social context, in terms of commercial interests involved in their design and production, how they are ‘lived with’ and the way this recursively informs their design (Beer 2017: 4). Furthermore, our identities shape media but media also shapes our identities (Pariser 2011). Since ‘there are people behind big data’ (Williamson 2017: x-xi), I am keen to ‘unpack the full socio-technical assemblage’ (Kitchin 2017: 25), uncover ideologies, commercial and political agendas (Williamson 2017: 3) and understand the ‘algorithmic life’ (Amoore and Piotukh 2015) and ‘algorithmic culture’ (Striphas 2015) involved.

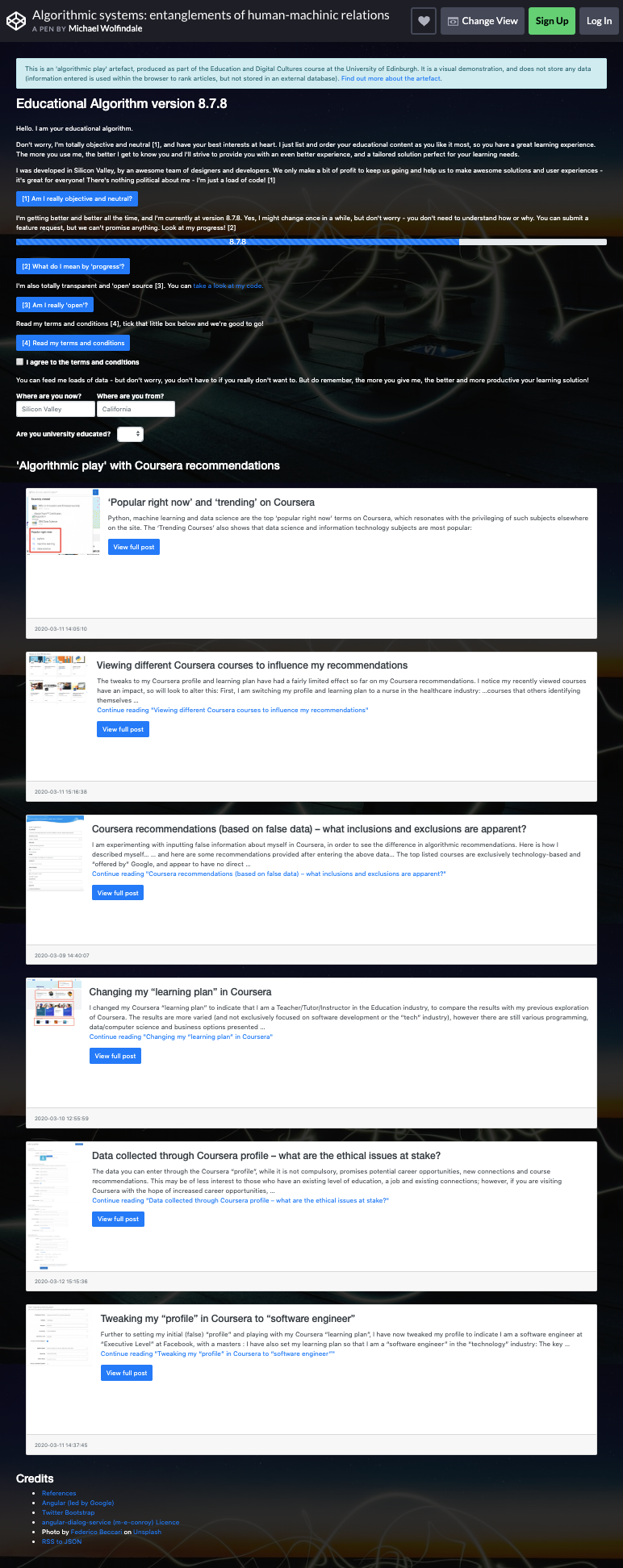

During my ‘algorithmic play’ with Coursera, I found that its “transformational” “learning experiences” and self-directed, predefined ‘learning plans’ perhaps exemplify Biesta’s (2005) ‘learnification’. Since ‘algorithms are inevitably modelled on visions of the social world’ (Beer 2017: 4), suggesting that education needs “transforming” and (as implied by Coursera’s dominance of “tech courses”) that ‘the solution is in the hands of software developers’ (Williamson 2017: 3) exposes a ‘technological solutionism’ (Morozov 2013) and Californian ideology (Barbrook and Cameron 1995) common to many algorithms entangled in my lifestream. Moreover, these data-intensive practices and interventions, tending towards ‘machine behaviourism’ (Knox et al. 2020), could profoundly shape notions of learning and teaching.

As I consider questions of power with regard to algorithmic systems (Beer 2017: 11) and the possibilities for resistance, educational institutions accept commercial “EdTech solutions” designed to “rescue” them during the coronavirus crisis. This accelerated ‘datafication’ of education, seen in the context of wider neoliberal agendas, highlights a growing urgency to critically examine changes to pedagogy, assessment and curriculum (Williamson 2017: 6).

However, issues of authorship, responsibility and agency are complex, for algorithmic systems are works of ‘collective authorship’, ‘massive, networked [boxes] with hundreds of hands reaching into them’ (Seaver 2013: 8-10). As ‘processes of “datafication” continue to expand and…data feeds-back into people’s lives in different ways’ (Kennedy et al. 2015: 4), I return to the concept of ‘feedback loops’ questioning the ‘boundaries of the autonomous subject’ (Hayles 1999: 2). If human-machinic boundaries are blurred and autonomous will is problematic (ibid.: 288), we might consider algorithmic systems/actions in terms of ‘human-machinic cognitive relations’ (Amoore 2019: 7) or ‘cognitive assemblages’ (Hayles 2017): entangled intra-relations, performative in nature and seen in the context of sociomaterial assemblages (Barad 2007; Introna 2016; Butler 1990) – an ‘entanglement of agencies’ (Knox 2015).

I close with an audio/visual snippet and a soundtrack to my EDC journey…

Great artefact, Adrienne, and really like the annotated screencast format – works brilliantly with the subject matter!

Fascinating (and perhaps sobering!) how you pinned down aspects which were influenced by activity outside of your Instagram account, such as your search history. Reminds me of a quote from Seaver (2013: 10) I came across which builds on the “black box metaphor” to argue that ‘these algorithmic systems are not standalone little boxes, but massive, networked ones with hundreds of hands reaching into them’:

Seaver, N., 2013. Knowing Algorithms. Presented at Media in Transition 8, Cambridge, MA. Available from: https://static1.squarespace.com/static/55eb004ee4b0518639d59d9b/t/55ece1bfe4b030b2e8302e1e/1441587647177/seaverMiT8.pdf [Accessed 11 Mar 2020].

It’s great that you’ve discussed multiple algorithmic systems and the wider context in that sense since, as you also note, it is all too often the case that a handful of big tech companies own many of these different “apps” (such as Facebook owning Instagram). As you draw from Williamson (2017), the business models, entrepreneurial cultures and commercial and political agendas are all huge factors here. The Silicon Valley model and associated ideologies are aspects that also came to the fore in my brief “play” with Coursera. Really clear and thorough conclusions here!

Great work – really enjoyed it!